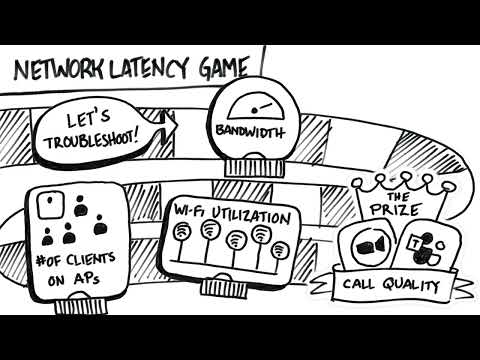

In this video, we dive into the world of Explainable AI with a focus on SHAP (SHapley Additive exPlanations). AI models often lack transparency, making it hard for users to understand why a model made a given prediction. SHAP addresses this by providing detailed, visual explanations of individual predictions, fairly attributing each prediction to the contributions of its input features.

We explore the origins of Shapley values in cooperative game theory, their application to troubleshooting network latency, and how SHAP values provide consistent, interpretable attributions. Learn how SHAP builds trust in machine learning solutions and eases their adoption, with practical examples from Juniper Networks, where SHAP helps explain latency predictions for Zoom and Microsoft Teams video calls.
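To make the idea of "fair attribution" concrete, here is a minimal sketch that computes exact Shapley values by enumerating every coalition of features. The latency model, its feature names (`wan_jitter_ms`, `wifi_retries`, `cpu_load_pct`), and its coefficients are hypothetical illustrations, not Juniper's model. It also assumes one simple convention for an "absent" feature: replace it with a single baseline value. The `shap` library's explainers instead average over background data and use faster approximations.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List

Features = Dict[str, float]


def shapley_values(model: Callable[[Features], float],
                   x: Features, baseline: Features) -> Dict[str, float]:
    """Exact Shapley values for one prediction (exponential in #features)."""
    names: List[str] = list(x)
    n = len(names)

    def value(coalition: set) -> float:
        # Features in the coalition keep their observed value; the rest
        # fall back to the (assumed) baseline value.
        point = {k: (x[k] if k in coalition else baseline[k]) for k in names}
        return model(point)

    phi: Dict[str, float] = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to coalition S
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


# Hypothetical toy model: predicted video-call latency in milliseconds,
# including one interaction term between jitter and Wi-Fi retries.
def latency_model(f: Features) -> float:
    return (20.0 + 2.0 * f["wan_jitter_ms"] + 5.0 * f["wifi_retries"]
            + 0.5 * f["wan_jitter_ms"] * f["wifi_retries"]
            + 0.25 * f["cpu_load_pct"])


x = {"wan_jitter_ms": 10.0, "wifi_retries": 4.0, "cpu_load_pct": 20.0}
baseline = {"wan_jitter_ms": 0.0, "wifi_retries": 0.0, "cpu_load_pct": 0.0}

phi = shapley_values(latency_model, x, baseline)
print({k: round(v, 6) for k, v in phi.items()})
# → {'wan_jitter_ms': 30.0, 'wifi_retries': 30.0, 'cpu_load_pct': 5.0}
```

The 20 ms interaction between jitter and retries is split evenly between the two features, and the attributions sum to the gap between the prediction (85 ms) and the baseline prediction (20 ms). This "efficiency" property is what lets a SHAP explanation account for the whole prediction rather than weighting every feature the same.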

Join us to understand how SHAP can make AI more transparent and trustworthy, leading to better user and operator experiences.

Resources:

https://www.juniper.net/us/en/the-feed/topics/ai-and-machine-learning/assured-video-experiences-with-zoom-integration.html

https://blogs.juniper.net/en-us/enterprise-cloud-and-transformation/marvis-learns-about-zoom-user-experiences-to-improve-network-performance