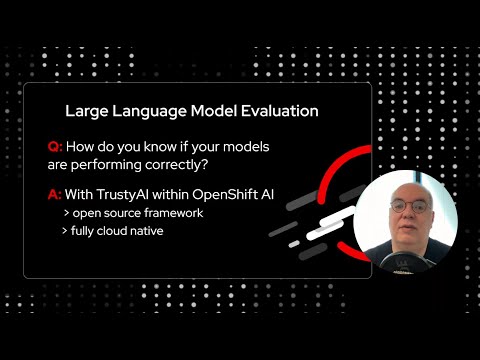

Before deploying large language models (LLMs) in production, it’s crucial to know whether they perform accurately. Basic spot checks are not enough: you need a systematic view of the quality of your model’s responses. This is where the open source LM Evaluation Harness framework (LMEval) comes in. TrustyAI and Red Hat OpenShift AI integrate LMEval, making it cloud-native and allowing you to run systematic evaluations at scale within your production OpenShift environment. The result is objective, quantitative measurements of how your LLMs perform.

In this explainer video and demo, Rui Vieira, a principal software engineer in Red Hat’s AI division, describes the tool and shows how to use open source benchmarks like MMLU (Massive Multitask Language Understanding). MMLU is a popular benchmark that tests models across a wide range of knowledge domains and reasoning tasks. Results from LMEval give you objective, quantitative insight into model capabilities, which is essential for responsible AI deployment: understanding a model’s limitations and avoiding its use in areas where it lacks expertise. LMEval can also help you evaluate critical aspects like toxicity using specialized benchmarks.
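To make the idea of per-domain scoring concrete, here is a minimal sketch of how accuracy on a multiple-choice benchmark such as MMLU can be tallied by subject. It does not use LMEval itself; the `results` data, the subject names, and the `accuracy_by_subject` helper are hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Hypothetical graded items: (subject, model's choice, correct choice).
# Real MMLU questions are multiple choice with options A-D.
results = [
    ("college_physics", "B", "B"),
    ("college_physics", "A", "C"),
    ("professional_law", "D", "D"),
    ("professional_law", "C", "C"),
]


def accuracy_by_subject(items):
    """Return the fraction of correctly answered questions per subject."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for subject, predicted, answer in items:
        totals[subject] += 1
        if predicted == answer:
            correct[subject] += 1
    return {subject: correct[subject] / totals[subject] for subject in totals}


scores = accuracy_by_subject(results)
for subject, score in sorted(scores.items()):
    print(f"{subject}: {score:.2f}")
```

A breakdown like this is what lets you spot domains where a model is weak, rather than relying on a single aggregate number.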

Timestamps:

0:00 – Introduction

0:42 – Basic Model Check vs. Systematic Evaluation

1:58 – Systematic Evaluation with TrustyAI (LMEval)

2:50 – Orchestrating the Evaluation Job

3:18 – Understanding the Benchmarks (MMLU Overview)

4:27 – MMLU Structure & Scoring

5:00 – MMLU Example & Domain-Specific Knowledge

5:31 – Accessing Evaluation Results (Objective, Quantitative Insights)

6:32 – Interpreting Results & Importance for Responsible AI

7:23 – Evaluating Toxicity & Conclusion

Learn more: https://red.ht/AI
