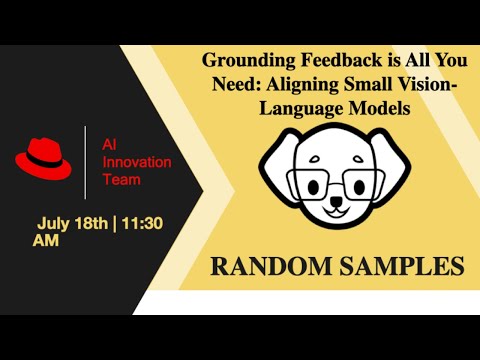

Random Samples is a weekly seminar series that bridges the gap between cutting-edge AI research and real-world application. Designed for AI developers, data scientists, and researchers, each episode explores the latest advancements in AI and how they’re being used in production today.

This week’s topic:

Grounding Feedback is All You Need: Aligning Small Vision-Language Models

Abstract:

While recent vision-language models (VLMs) excel at integrating visual and linguistic information, their performance hinges on vast quantities of curated image-text pairs. This reliance makes the alignment process both time-consuming and resource-intensive. In this talk, we’ll introduce Sampling-based Vision Projection (SVP), a novel framework that improves vision-language alignment using automated feedback and minimal human supervision. Our results show that SVP significantly enhances image captioning, improves object recall, and reduces hallucination, enabling smaller models to match the performance of much larger systems. This approach offers a promising path toward developing powerful, efficient, and accessible multimodal AI.

Subscribe to stay ahead of the curve with weekly deep dives into AI! New episodes drop every Friday.